Jakuzure: Simple Photo Album Tools

Introduction

Jakuzure is a package of simple tools to manage your photo albums on your computer. With them, you can convert and organize your photo data flexibly according to your own needs. You can also run your own Web service to manage your photo album via Web browsers.

I assume that you take photos routinely and save them on your local storage and/or on cloud storage over the Internet. You may shoot raw images and develop them with software like Adobe Lightroom, or you may shoot JPEG images in-camera. Either way, you'll import your image files to your computer to save, view, and share them with your friends and relatives.

You save images on your own hard disk or solid-state storage. However, any hardware can crash and make you lose your precious images forever. To avoid that, you can make secondary backups on other devices. Nevertheless, what happens if a flood, fire, or earthquake destroys your house? And aren't your kids, cats, and thieves after your computer? Thus, it is desirable to make backups on cloud storage too. That is much safer, although it costs some money every month, and the running cost increases as your data gradually accumulates.

One solution is to use a free service like Google Photos, on which you can save an unlimited amount of data as long as each file meets its constraints. On the free storage of Google Photos, each file must have 16 million pixels or fewer, and its size must be 50MB or less. Moreover, if you upload JPEG images, the server implicitly re-compresses them, which degrades image quality. The good news is that if you upload TIFF files, such re-compression doesn't happen. Therefore, using the free storage of Google Photos for backup is a practical solution even if you are particular about image quality.

Here's my suggestion. Save your photo data on both your local storage and Google Photos (or a similar service). After you import or develop image files in TIFF or JPEG format, save them as-is so that they keep the best image quality. Next, upload altered copies whose data is reduced so that each file meets the restrictions of the cloud service.

The script "jkzr_proc" is a tool for that alteration. It runs on your computer and converts image files into smaller ones while preserving the image area and quality as much as possible. Let's say you have 16-bit TIFF files developed in Lightroom. Each has 24 million pixels and a file size of 137MB, both of which far exceed the limits of Google Photos. So, you must reduce both the area and the file size. One solution is to make a TIFF file of 16 million pixels with 8-bit color depth. As one pixel is composed of 3 channels (R, G, B) of 1 byte each, the file size is 16 million * 3 * 1 ~= 48MB. However, although TIFF is not subject to re-compression, 8-bit color depth falls short of current technology, where raw files record 14-bit color and the dynamic range of digital cameras has reached 15 EV. Instead, I suggest uploading images of 12 million pixels with 12-bit color depth. TIFF supports lossless ZIP compression, with which you can usually keep the data under 50MB even with those high-quality parameters.
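The sizes quoted above follow from pixels x channels x bytes per channel. Here is a minimal Python sketch of that arithmetic, assuming three channels and that samples deeper than 8 bits occupy 2 bytes each, as in a 16-bit TIFF. The 137MB figure corresponds to 144,000,000 bytes counted in mebibytes (2^20 bytes).

```python
MIB = 1024 * 1024  # one mebibyte (2**20 bytes)

def raw_size(pixels: int, bits_per_channel: int, channels: int = 3) -> int:
    """Uncompressed image size in bytes.

    Assumes samples of up to 8 bits take 1 byte and deeper samples take 2 bytes.
    """
    bytes_per_channel = 1 if bits_per_channel <= 8 else 2
    return pixels * channels * bytes_per_channel

for label, pixels, bits in [
    ("24 MP, 16-bit (Lightroom export)", 24_000_000, 16),
    ("16 MP, 8-bit", 16_000_000, 8),
    ("12 MP, 12-bit", 12_000_000, 12),
]:
    size = raw_size(pixels, bits)
    print(f"{label}: {size:,} bytes = {size / MIB:.1f} MiB")
```

The 24-megapixel line reproduces the 137MB figure. The 12-bit line shows about 68.7 MiB of uncompressed data, so if the limit is read as 50 MiB, ZIP has to save roughly 27%, which is why success is likely but not guaranteed.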

As there's no guarantee that ZIP reduces the data size as expected, jkzr_proc generates files on a trial-and-error basis. If the first attempt makes a file whose size exceeds the 50MB limit, it reduces the color depth and tries again until the size meets the limit. In my experience, attempts with 12 million pixels in 12-bit color with ZIP compression succeed in more than 95% of cases; the remaining 5% fall back to 10-bit color. If the area parameter is too lax and the file size exceeds the limit even in 8-bit color, the area is reduced gradually until the file size meets the limit. The point is that all the tedious tasks are done automatically. All you have to do is run jkzr_proc and upload the result to Google Photos. Adjusting the color depth manually is not practical, and resizing images while keeping the aspect ratio and maximizing the area is cumbersome.
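The trial-and-error procedure can be sketched as a loop. This is a hypothetical illustration rather than jkzr_proc's implementation: `encode` stands in for the actual conversion and returns the output size, and the depth sequence (12, 10, 8), the 0.9 area factor, the minimum area, and the toy size model are all assumptions.

```python
from typing import Callable, Sequence, Tuple

MIB = 1024 * 1024

def fit_under_limit(
    encode: Callable[[int, int], int],
    max_size: int,
    area: int,
    depths: Sequence[int] = (12, 10, 8),
    area_factor: float = 0.9,
    min_area: int = 1_000_000,
) -> Tuple[int, int, int]:
    """Return (area, depth, size) of the first attempt that fits in max_size."""
    # Step 1: keep the requested area and lower the color depth.
    for depth in depths:
        size = encode(area, depth)
        if size <= max_size:
            return area, depth, size
    # Step 2: even the lowest depth is too large, so shrink the area gradually.
    depth = depths[-1]
    while area > min_area:
        area = int(area * area_factor)
        size = encode(area, depth)
        if size <= max_size:
            return area, depth, size
    raise ValueError("cannot meet the size limit")

def fake_encode(area: int, depth: int) -> int:
    """Toy size model: 3 channels of depth/8 bytes each, with ZIP saving 20%."""
    return area * 3 * depth // 10

print(fit_under_limit(fake_encode, 50 * MIB, 12_000_000))  # 12-bit fits
print(fit_under_limit(fake_encode, 50 * MIB, 16_000_000))  # falls back to 10-bit
```

With the toy model, a 16-megapixel input falls back from 12-bit to 10-bit, mirroring the fallback described above; a real encoder's sizes depend on image content.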

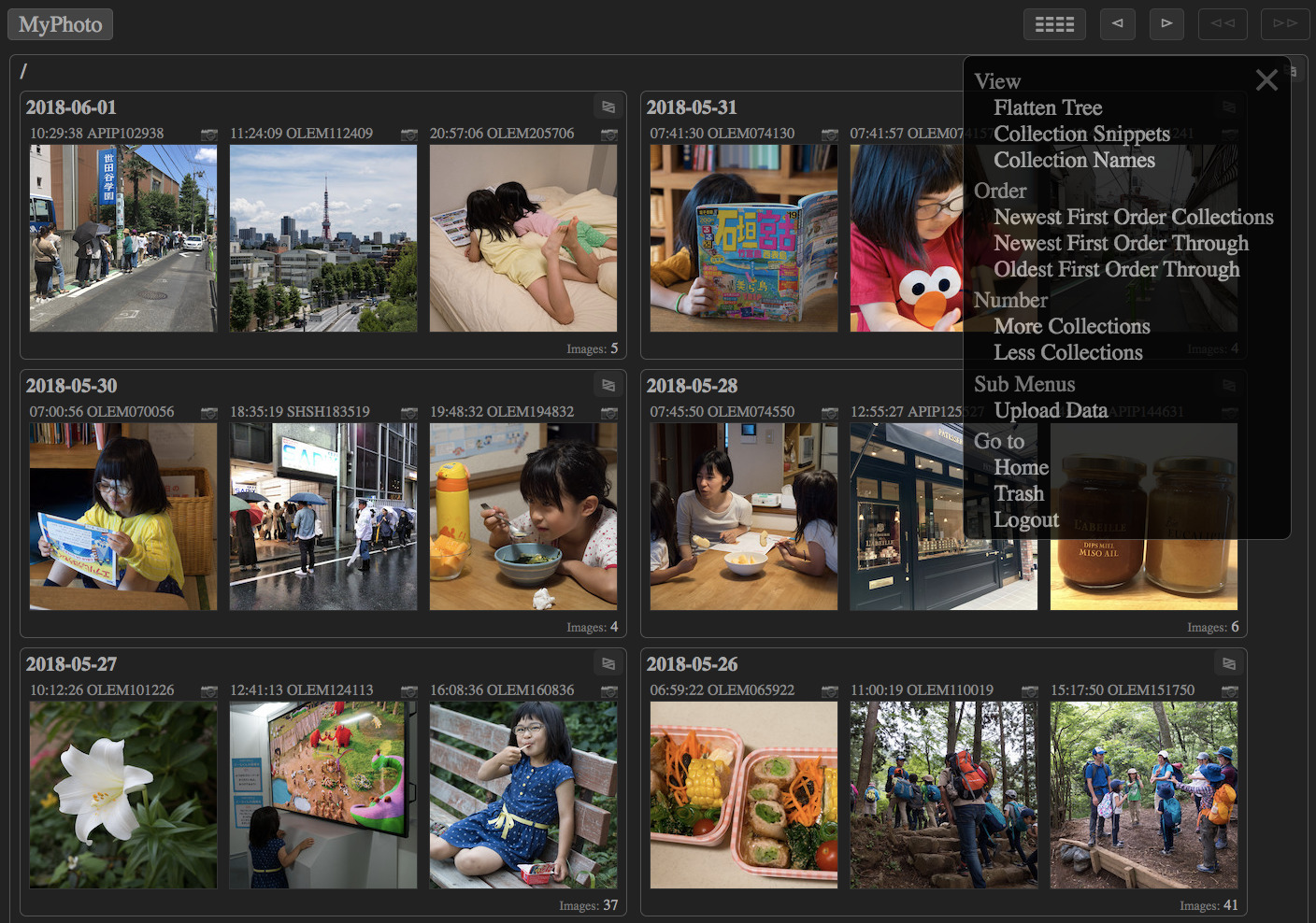

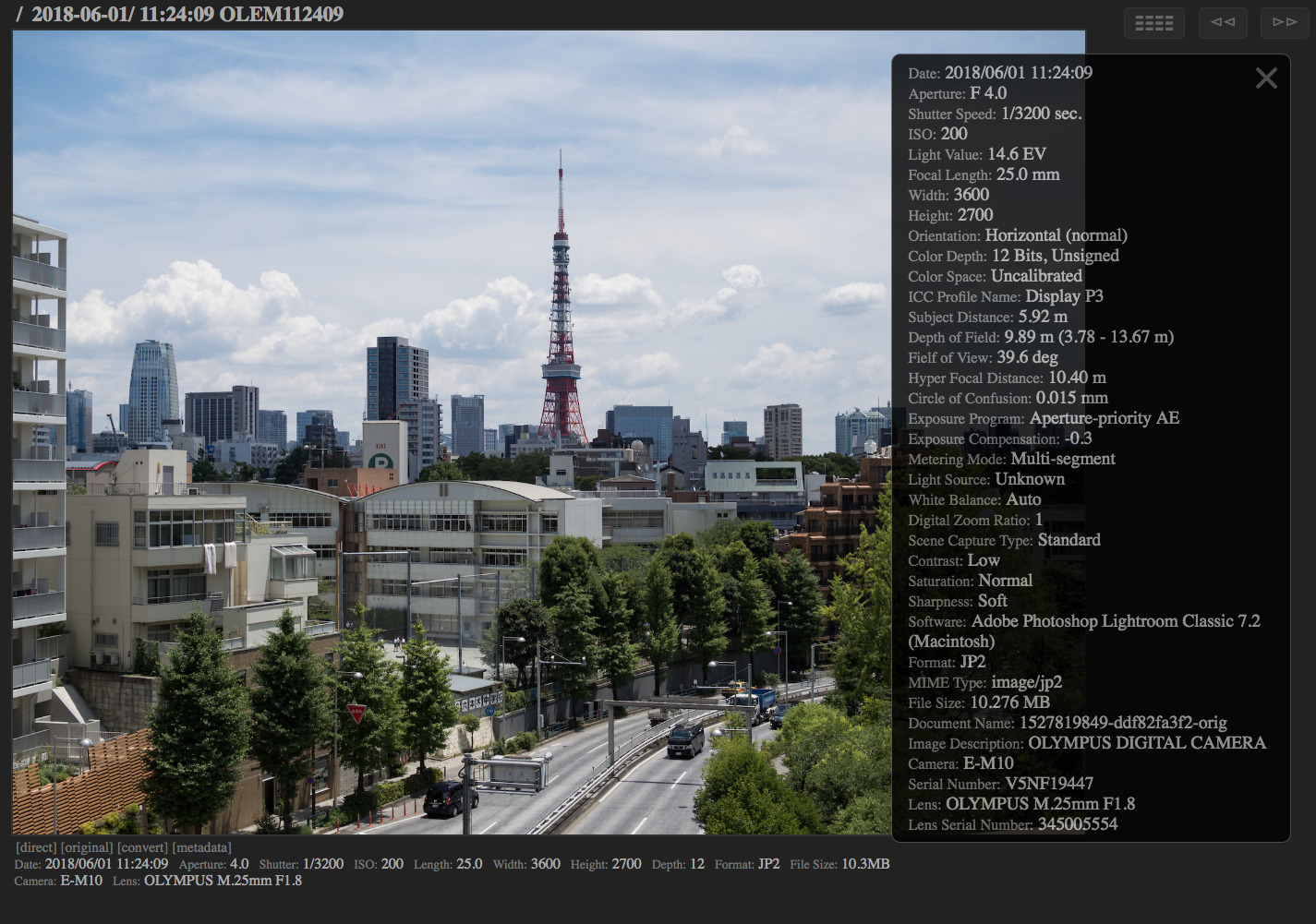

Whereas cloud services make it convenient for you and your friends to browse your photos, you also want to browse the image files stored on your computer because the best-quality files are there. The script "jkzr_list" is a tool for that. It works as both a command-line tool and a Web service. You can use your Web browser to search your photo album and view slide shows. Its user interface is optimized for easy access from mobile devices and quick control from desktop computers with keyboards. It also has features to manage the album: you can upload new images to the album and delete images from it.

Let's say you use Lightroom to handpick good photos that deserve to be saved and browsed later. It is important to choose only good-enough photos because crawling through mediocre photos wastes your precious time and they consume storage space unnecessarily. At the same time, it is also important to keep seemingly good photos because they might become your favorites after a month, a year, or a decade. If you can easily delete some of them at any time, you can also keep uncertain candidates without worry. The most important feature of jkzr_list is a seamless interface for browsing and deletion. Browse your photos a month after you took them. If you feel that some of them don't deserve to be seen again even a month after shooting, don't hesitate to delete them. Removed images are actually moved to the trash, so you can restore them until you empty the trash.

Try the demo site to get a feel for it. As the sample data sets are restored every three hours, you can delete some of them as you like. Take a look at the help page for all features.

Installation

You need a UNIX-like system such as Mac OS X or Linux, or Windows. You must install Python (3.3 or later), ImageMagick (6.9 or later), and ExifTool (10.8 or later); consult the documentation of each package for installation. If you want to handle raw image files, also install Dcraw (8.25 or later). If you want to handle video files, also install FFmpeg (3.4 or later). If you want to enable dynamic range optimization, also install Enfuse (4.2 or later).

Download this archive file and expand it.

$ unzip jakuzure.zip

You'll see "jkzr_proc" and "jkzr_list" in the expanded directory "jakuzure". They are command-line tools, so give them executable permission and install them in a directory for commands (like "/usr/local/bin"). On Mac OS, "python3" is often installed under "/usr/local/bin". In that case, modify the first (shebang) line "#! /usr/bin/python3" of each script file to be "#! /usr/local/bin/python3".

$ cd jakuzure
$ chmod 755 jkzr_proc jkzr_list
$ sudo cp jkzr_proc jkzr_list /usr/local/bin

There are also ICC profile files, which jkzr_proc uses to handle color profiles. Install all the ICC files under "/usr/local/share/color/icc".

$ sudo mkdir -p /usr/local/share/color/icc
$ sudo cp icc_profiles/*.icc /usr/local/share/color/icc

Check whether installation was done successfully.

$ jkzr_proc --config

You'll see configuration information like the following. Confirm that ImageMagick and ExifTool are listed.

external command: identify -- version: ImageMagick 7.0.8-14 Q16 x86_64 2018-10-30 https://imagemagick.org
external command: convert -- version: ImageMagick 7.0.8-14 Q16 x86_64 2018-10-30 https://imagemagick.org
external command: exiftool -- version: 11.16
external command: dcraw -- version: unknown
external command: ffmpeg -- version: ffmpeg version 3.4.4-0ubuntu0.18.04.1 Copyright (c) 2000-2018 the FFmpeg developers
supported format: TIFF -- extensions: tif, tiff
supported format: JPEG -- extensions: jpg, jpe, jpeg
supported format: JPEG 2000 -- extensions: jp2, j2k
supported format: PNG -- extensions: png
supported format: GIF -- extensions: gif
supported format: BMP -- extensions: bmp
supported format: WEBP -- extensions: webp
supported format: HEIC -- extensions: heic
supported format: MP4 -- extensions: mp4, mpeg4
supported format: MOV -- extensions: mov, qt
supported format: AVI -- extensions: avi
icc profile: srgb -- path: /usr/share/color/icc/sRGB_IEC61966-2-1_black_scaled.icc
icc profile: adobe-rgb -- path: /usr/share/color/icc/Adobe-RGB.icc
icc profile: display-p3 -- path: /usr/share/color/icc/Display-P3.icc
icc profile: prophoto-rgb -- path: /usr/share/color/icc/ProPhoto-RGB.icc
icc profile: rec-709 -- path: /usr/share/color/icc/Rec-709.icc
icc profile: rec-2020 -- path: /usr/share/color/icc/Rec-2020.icc
Maximum area for normal color: 16000000
-- maximum size for 1:1: 4000x4000 = 16000000
-- maximum size for 5:4: 4440x3552 = 15770880
-- maximum size for 4:3: 4560x3420 = 15595200
-- maximum size for 7:5: 4620x3300 = 15246000
-- maximum size for 3:2: 4800x3200 = 15360000
-- maximum size for 8:5: 4992x3120 = 15575040
-- maximum size for 16:9: 5184x2916 = 15116544
-- maximum size for 2:1: 5600x2800 = 15680000
Maximum area for high color: 10000000
-- maximum size for 1:1: 3100x3100 = 9610000
-- maximum size for 5:4: 3500x2800 = 9800000
-- maximum size for 4:3: 3600x2700 = 9720000
-- maximum size for 7:5: 3696x2640 = 9757440
-- maximum size for 3:2: 3780x2520 = 9525600
-- maximum size for 8:5: 4000x2500 = 10000000
-- maximum size for 16:9: 4160x2340 = 9734400
-- maximum size for 2:1: 4400x2200 = 9680000

If you want to use jkzr_list as a Web service, you must run a Web server that can execute CGI scripts. Consult the documentation of the Web server to configure it. How to configure jkzr_list as a CGI script is explained later. If you don't need the Web features, you can skip this.

jkzr_proc

jkzr_proc is a batch script to convert multiple images. You specify image files as command-line arguments. By default, each image is copied without any modification. The output is saved under the directory specified by the "--destination" flag. Let's say you have TIFF image files made by Lightroom under "/home/you/developed". To convert them into JPEG and output them into "/home/you/converted", run the following.

$ mkdir -p /home/you/converted
$ jkzr_proc /home/you/developed/*.tif \
    --destination /home/you/converted \
    --output_format jpg

If you want to convert images into JPEG with 16 million pixels or fewer, specify the limit with the "--max_area" flag. Usually, you also specify the "--unsharp" flag to apply an unsharp mask so that the shrunk image stays crisp.

$ jkzr_proc /home/you/developed/*.tif \
    --destination /home/you/converted \
    --output_format jpg \
    --max_area 16m \
    --unsharp 0.7 0.5
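Fitting an image into a maximum area while keeping its aspect ratio comes down to scaling both sides by the square root of the area ratio. A minimal Python sketch of that idea follows; `fit_area` is a hypothetical helper, not part of jkzr_proc. The "--config" listing above shows coarser sizes (for example 4800x3200 rather than 4898x3265 for 3:2 at 16 million pixels), so jkzr_proc evidently rounds differently; this sketch simply floors.

```python
import math
from typing import Tuple

def fit_area(width: int, height: int, max_area: int) -> Tuple[int, int]:
    """Largest size with (nearly) the same aspect ratio and area <= max_area."""
    if width * height <= max_area:
        return width, height  # already small enough; never enlarge
    scale = math.sqrt(max_area / (width * height))
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    # Floating-point error could push the product just over the limit; back off.
    while new_w * new_h > max_area and new_w > 1:
        new_w -= 1
        new_h = max(1, math.floor(new_w * height / width))
    return new_w, new_h

print(fit_area(6000, 4000, 16_000_000))  # a 24-megapixel 3:2 image -> (4898, 3265)
```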

Whereas the "--max_area" flag applies to low-color images with 8-bit depth such as JPEG, the "--max_area_high" flag applies to high-color images with more than 8-bit depth. Therefore, if you want to shrink 16-bit TIFF images to 16 million pixels or fewer, specify the "--max_area_high" flag. If you omit the "--output_format" flag, the output format is the same as the input.

$ jkzr_proc /home/you/developed/*.tif \
    --destination /home/you/converted \
    --max_area_high 16m \
    --unsharp 0.7 0.5

If you want to upload your images to Google Photos, set the "--prepare_for_googlephotos" flag, which is an alias of "--max_area=16m", "--max_area_high=12m", "--max_size=50mi", "--max_depth=12", and "--output_format=tif". That is, every input image, including TIFF and JPEG, is converted to TIFF in order to avoid re-compression on Google Photos. JPEG and 8-bit TIFF are reduced to 16 million pixels to meet the restrictions of Google Photos. 16-bit TIFF is reduced to 12 million pixels and its color depth is limited to 12 bits so that the output image, in losslessly compressed TIFF format, meets the 50MB limit of Google Photos.
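The per-image targets implied by "--prepare_for_googlephotos" can be summarized as a small decision function. This is an illustrative sketch, not jkzr_proc's code; `Target` and `google_photos_target` are hypothetical names, and it assumes "16m" means 16,000,000 pixels (as the "--config" listing suggests) and "50mi" means 50 * 2**20 bytes.

```python
from dataclasses import dataclass

MIB = 1024 * 1024

@dataclass(frozen=True)
class Target:
    output_format: str
    max_area: int    # pixels
    max_depth: int   # bits per channel
    max_size: int    # bytes

def google_photos_target(bits_per_channel: int) -> Target:
    """Limits that --prepare_for_googlephotos implies for one input image."""
    if bits_per_channel <= 8:
        # JPEG or 8-bit TIFF: "--max_area=16m" is the relevant limit.
        return Target("tif", 16_000_000, bits_per_channel, 50 * MIB)
    # High-color input such as 16-bit TIFF: "--max_area_high=12m", "--max_depth=12".
    return Target("tif", 12_000_000, min(bits_per_channel, 12), 50 * MIB)

print(google_photos_target(8))
print(google_photos_target(16))
```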

$ jkzr_proc /home/you/developed/*.tif \
    --destination /home/you/converted \
    --prepare_for_googlephotos \
    --unsharp 0.7 0.5

Note that jkzr_proc is much more versatile. It can convert images between JPEG, TIFF, JPEG 2000, PNG, GIF, BMP, WEBP, and HEIC. Fundamental image processing functions such as trimming, level correction, and gamma correction are implemented. Development of raw images is also supported. Running it with "--help" shows more information.

$ jkzr_proc --help

Next, let's organize your photos to store them on your final storage, perhaps a hard disk. Let's say you store photos under "/media/photo_disk/album". Since you want to keep the images intact without any conversion, you don't set any reduction flags. Meanwhile, set "--date_subdir" to make subdirectories named after the shooting date, like "2018-10-07". To make the shooting time clear, set "--timestamp_name" too. To preserve the original file names, set "--inherent_name" too. To prevent collisions between files with the same timestamp, set "--unique_name" too. As a result, names like "2018-10-07/20181007181924-PX10235-b18c383e" are given. Finally, set "--catalog" to generate catalog files, which are used by jkzr_list.
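The naming flags can be pictured as building one path from the shooting time, the original file name, and a random suffix. This is a hypothetical sketch: it assumes the example name came from an original file "PX10235.tif", takes the shooting time as an argument rather than reading EXIF, and assumes an 8-hex-digit suffix. The listings later in this document show suffixes such as "zz85c1c9ab", so jkzr_proc's actual format may differ.

```python
import secrets
from datetime import datetime
from pathlib import PurePath

def catalog_name(shot_at: datetime, original_path: str, suffix: str = "") -> str:
    """Build a name like "2018-10-07/20181007181924-PX10235-b18c383e"."""
    date_subdir = shot_at.strftime("%Y-%m-%d")    # like --date_subdir
    timestamp = shot_at.strftime("%Y%m%d%H%M%S")  # like --timestamp_name
    inherent = PurePath(original_path).stem       # like --inherent_name
    unique = suffix or secrets.token_hex(4)       # like --unique_name (assumed format)
    return f"{date_subdir}/{timestamp}-{inherent}-{unique}"

print(catalog_name(datetime(2018, 10, 7, 18, 19, 24), "/home/you/developed/PX10235.tif"))
```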

$ jkzr_proc /home/you/developed/*.tif \
    --destination /media/photo_disk/album \
    --date_subdir --timestamp_name --inherent_name --unique_name \
    --catalog

If there are too many input image files, specifying them as command arguments is impractical. In that case, use the "find" command and read its result from the standard input by setting the "--read_stdin" flag.

$ find /home/you/developed -type f -print |
    egrep -i '\.(tif|jpg)$' |
    jkzr_proc --read_stdin \
    --destination /media/photo_disk/album \
    --date_subdir --timestamp_name --inherent_name --unique_name \
    --catalog
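On Windows, where "find" and "egrep" are not available by default, a short Python script can produce the same one-path-per-line list. This is a hypothetical helper (saved, say, as "find_images.py"), matching file names the way the egrep filter does; it is not part of the package.

```python
import os
import re
import sys
from typing import Iterator

# Same filter as egrep -i '\.(tif|jpg)$': names ending in .tif or .jpg, any case.
PATTERN = re.compile(r"\.(tif|jpg)$", re.IGNORECASE)

def find_images(root: str) -> Iterator[str]:
    """Yield matching file paths under root, one at a time."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            if PATTERN.search(name):
                yield os.path.join(dirpath, name)

if __name__ == "__main__" and len(sys.argv) > 1:
    # Print one path per line, which is what --read_stdin reads.
    for path in find_images(sys.argv[1]):
        print(path)
```

Its output can then be piped into "jkzr_proc --read_stdin" with the same flags as above.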

If you specify the "--catalog" flag, the following files are generated for each image file. There is neither a database nor configuration files, so you can simply remove and rename them as long as the naming convention is kept.

| Type | File Name | Role |
|---|---|---|
| Data File | *-data.* | Image data file with the highest quality |
| Metadata File | *-meta.tsv | TSV text file containing metadata such as EXIF tags |
| View Image File | *-view.jpg | Reduced JPEG image data for viewing in browsers |
| Thumbnail File | *-thumb.jpg | Thumbnail JPEG image data for listing in browsers |
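Because the catalog is nothing but files following this naming convention, a manual rename or deletion can leave a record incomplete. The following Python sketch reports records that lack one of the four roles. It is a hypothetical checker, not part of the package, and it assumes every record should have all four files, which may not hold for generic binary files.

```python
import re
from collections import defaultdict
from pathlib import Path
from typing import Dict, Iterable, Set

# File-name patterns for the four catalog roles in the table above.
ROLE_PATTERNS = {
    "data": re.compile(r"^(?P<stem>.+)-data\.[^.]+$"),
    "meta": re.compile(r"^(?P<stem>.+)-meta\.tsv$"),
    "view": re.compile(r"^(?P<stem>.+)-view\.jpg$"),
    "thumb": re.compile(r"^(?P<stem>.+)-thumb\.jpg$"),
}

def incomplete_records(file_paths: Iterable[str]) -> Dict[str, Set[str]]:
    """Map each record (path without role suffix) to the roles it lacks."""
    found: Dict[str, Set[str]] = defaultdict(set)
    for file_path in file_paths:
        path = Path(file_path)
        for role, pattern in ROLE_PATTERNS.items():
            match = pattern.match(path.name)
            if match:
                found[str(path.parent / match.group("stem"))].add(role)
                break
    all_roles = set(ROLE_PATTERNS)
    return {stem: all_roles - roles for stem, roles in found.items() if roles != all_roles}

# Example:
# files = (str(p) for p in Path("/media/photo_disk/album").rglob("*") if p.is_file())
# print(incomplete_records(files))
```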

After you develop or import images on your PC, you can upload them to Google Photos with minimal degradation of image quality. You can also organize images on your hard disk and make catalog data in order to browse them locally and remotely.

jkzr_list

jkzr_list is a script to search an album directory created by jkzr_proc with the "--catalog" flag. It's like the "find" program for the catalog. It works as a command-line tool and also as a CGI script. First, let's see how to use it as a command-line tool. You specify the location of your album directory with the "--album_dir" flag.

$ jkzr_list --album_dir /media/photo_disk/album

By default the root resource, whose ID is "/", is searched. Like the "ls" command, it shows child resource IDs of "/".

{
  "result": {
    "id": "/",
    "type": "collection",
    "collection_serial": 0,
    "num_child_records": 0,
    "num_child_collections": 21,
    "num_orphans": 0,
    "num_reachable_records": 0,
    "items": [
      {
        "id": "/2017-01-07",
        "type": "collection"
      },
      {
        "id": "/2017-01-08",
        "type": "collection"
      },
      ...
    ]
  },
  "stats": {
    "num_total_records": 0,
    "num_total_collections": 21,
    "num_scanned_records": 0,
    "num_scanned_collections": 0,
    "num_skipped_records": 0,
    "num_skipped_collections": 0,
    "elapsed_time": 0.0008757114410400391
  }
}

As you created subdirectories named after the shooting dates of photos, the child IDs are like "/2017-01-07". Let's search a child.

$ jkzr_list --album_dir /media/photo_disk/album /2017-01-07

You see resource IDs of records under "/2017-01-07".

{
  "result": {
    "id": "/2017-01-07",
    "type": "collection",
    "collection_serial": 0,
    "num_child_records": 32,
    "num_child_collections": 0,
    "num_orphans": 0,
    "num_child_images": 32,
    "num_reachable_records": 32,
    "items": [
      {
        "id": "/2017-01-07/20170107125006-OLEM125006-zz85c1c9ab",
        "type": "record"
      },
      {
        "id": "/2017-01-07/20170107125034-OLEM125034-zzc24908ab",
        "type": "record"
      },
      ...
    ]
  },
  "stats": {
    "num_total_records": 32,
    "num_total_collections": 0,
    "num_scanned_records": 0,
    "num_scanned_collections": 0,
    "num_skipped_records": 0,
    "num_skipped_collections": 0,
    "elapsed_time": 0.0023162364959716797
  }
}

In the context of a photo album, we call a set of data for an image a "record", and a directory containing image data a "collection". The generic term for both "record" and "collection" is "resource". Resources in an album are organized as a tree structure that parallels the directory structure on the storage. Note that the resource IDs of records don't have suffixes like "-data.tif", whereas collection IDs are just the same as directory names.
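The mapping from files and directories to resource IDs can be sketched as follows. This is a hypothetical helper inferred from the IDs shown above, not jkzr_list's code: directory paths become collection IDs as-is, and file paths lose their role suffix to become record IDs.

```python
import re
from pathlib import PurePosixPath

# Role suffixes that do not appear in record IDs.
ROLE_SUFFIX = re.compile(r"-(data\.[^.]+|meta\.tsv|view\.jpg|thumb\.jpg)$")

def resource_id(relative_path: str, is_directory: bool) -> str:
    """Resource ID of a path relative to the album directory."""
    path = PurePosixPath("/") / relative_path
    if is_directory:
        return str(path)  # collection ID: the directory path itself
    return str(path.parent / ROLE_SUFFIX.sub("", path.name))  # record ID

print(resource_id("2017-01-07/20170107125006-OLEM125006-zz85c1c9ab-data.tif", False))
```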

jkzr_list can search collections recursively if you specify the recursion level with "--depth" flag.

$ jkzr_list --album_dir /media/photo_disk/album --depth 3 /

{
  "result": {
    "id": "/",
    "type": "collection",
    "collection_serial": 0,
    "num_child_records": 0,
    "num_child_collections": 22,
    "num_orphans": 0,
    "num_reachable_records": 313,
    "items": [
      {
        "id": "/2017-01-06",
        "type": "collection",
        "collection_serial": 0,
        "num_child_records": 2,
        "num_child_collections": 0,
        "num_orphans": 0,
        "num_child_images": 2,
        "num_reachable_records": 2,
        "items": [
          {
            "id": "/2017-01-06/20170106101738-OLEM101738-zzb9c099b2",
            "type": "record",
            "record_serial": 0,
            "media": "image",
            "data_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106101738-OLEM101738-zzb9c099b2-data.jp2",
            "metadata_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106101738-OLEM101738-zzb9c099b2-meta.tsv",
            "viewimage_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106101738-OLEM101738-zzb9c099b2-view.jpg",
            "thumbnail_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106101738-OLEM101738-zzb9c099b2-thumb.jpg"
          },
          {
            "id": "/2017-01-06/20170106102151-OLEM102151-zz107e0a8a",
            "type": "record",
            "record_serial": 1,
            "media": "image",
            "data_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106102151-OLEM102151-zz107e0a8a-data.jp2",
            "metadata_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106102151-OLEM102151-zz107e0a8a-meta.tsv",
            "viewimage_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106102151-OLEM102151-zz107e0a8a-view.jpg",
            "thumbnail_url": "file::///home/mikio/public_html/myphoto/album/2017-01-06/20170106102151-OLEM102151-zz107e0a8a-thumb.jpg"
          }
        ]
      },
      ...
    ]
  },
  "stats": {
    "num_total_records": 313,
    "num_total_collections": 22,
    "num_scanned_records": 313,
    "num_scanned_collections": 22,
    "num_skipped_records": 0,
    "num_skipped_collections": 0,
    "elapsed_time": 0.06680583953857422
  }
}

As you can see, the output of jkzr_list is in JSON format. Therefore, if you call it from a Web server, you can easily implement a Web API. If you configure jkzr_list as a CGI script, it works as a Web service. You can write your own clients that run in browsers or as native applications. In fact, jkzr_list implements client features too, so you don't have to write anything. Let's see how to configure it.
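For scripting against the command-line output, the following Python sketch runs jkzr_list and walks the returned tree. The helper names are hypothetical; the code relies only on the "id", "type", and "items" fields shown in the output above, assumes jkzr_list is on PATH, and needs Python 3.7 or later for `capture_output`.

```python
import json
import subprocess
from typing import Iterator, Optional

def run_jkzr_list(album_dir: str, resource_id: str = "/",
                  depth: Optional[int] = None) -> dict:
    """Run jkzr_list and parse its JSON output."""
    args = ["jkzr_list", "--album_dir", album_dir]
    if depth is not None:
        args += ["--depth", str(depth)]
    args.append(resource_id)
    completed = subprocess.run(args, check=True, capture_output=True, text=True)
    return json.loads(completed.stdout)

def iter_record_ids(node: dict) -> Iterator[str]:
    """Yield the IDs of all records in an already-fetched result tree."""
    if node.get("type") == "record":
        yield node["id"]
    for child in node.get("items", []):
        yield from iter_record_ids(child)

# Example:
# result = run_jkzr_list("/media/photo_disk/album", depth=3)["result"]
# for record_id in iter_record_ids(result):
#     print(record_id)
```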

CGI Script

Run a Web server such as Apache, Lighttpd, or NGINX with CGI scripts enabled (NGINX needs a separate CGI wrapper such as fcgiwrap). Confirm the following requirements. With Apache, you might struggle with the suEXEC feature to run CGI scripts as arbitrary users, which can be tough. I recommend running a dedicated server under a user account that can read and write the album directory.

- The effective user running the CGI script has permission to read and write the album directory.

- The "PATH" environment variable covers the directories containing "jkzr_proc", "exiftool", and "zip".

- If you use non-ASCII file names, the "LC_ALL" environment variable must be set appropriately.
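A quick way to verify these requirements is to run a small check as the Web server's user, for example from a throwaway CGI script. The following Python sketch is a hypothetical helper, not part of the package; it only tests whether "LC_ALL" is set, not whether its value suits your file names.

```python
import os
import shutil
from typing import List, Sequence

def check_cgi_requirements(album_dir: str,
                           commands: Sequence[str] = ("jkzr_proc", "exiftool", "zip"),
                           ) -> List[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    # Read, write, and traverse permission on the album directory.
    # Note: os.access tests the real user ID, which is normally the CGI user.
    if not os.access(album_dir, os.R_OK | os.W_OK | os.X_OK):
        problems.append(f"no read/write access to {album_dir}")
    # Each external command must be resolvable through PATH.
    for command in commands:
        if shutil.which(command) is None:
            problems.append(f"{command} is not found in PATH")
    # Non-ASCII file names need a suitable locale.
    if not os.environ.get("LC_ALL"):
        problems.append("LC_ALL is not set")
    return problems

print(check_cgi_requirements("/photo_server/public/album"))
```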

I don't dig into security topics here because the requirements vary depending on the server, security policy, and contents. Users who can access the CGI script via the network can see all data in the album. So, it's important to control access to the CGI script as well as to the image files, which are published by the server directly.

Let's say you have a directory "/photo_server/public" where both the CGI script and the image data files to be published are put. Then, copy jkzr_list as "/photo_server/public/list" and make a symbolic link to the album directory.

$ cp /usr/local/bin/jkzr_list /photo_server/public/list
$ ln -s /media/photo_disk/album /photo_server/public/album

If you use Apache, you'll put ".htaccess" under "/photo_server/public" in order to run the copied "list" file as a CGI script.

Options +ExecCGI
<Files list>
    SetHandler cgi-script
</Files>

If you enable the upload feature, you have to prepare a spool directory where uploaded files are stored.

$ mkdir /photo_server/spool

Let's say files under "/photo_server/public" are accessible as "https://yourdomain/photo/...". Then, the CGI script is accessible as "https://yourdomain/photo/list" and the photo data files are accessible as "https://yourdomain/photo/album/...". This means that every image file under the album directory is accessible to anyone who knows its URL. That's another reason to set the "--unique_name" flag when you create the catalog: the suffix is randomly generated, so it's hard for third parties to predict. Of course, you must not enable the "Indexes" option of the Web server.

To control access, you can use any of basic authentication, digest authentication, TLS client authentication, or the form authentication implemented by the CGI script. Or, you might not need any authentication if you run the server within your intranet. Let's say you choose the form authentication and set the password "abc". Generate a hash string of the password.

$ jkzr_list --generate_hash "abc"
900150983cd24fb0d6963f7d28e17f72
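The hash printed above is exactly the MD5 digest of "abc" (a standard MD5 test vector), so "--generate_hash" appears to compute a plain, unsalted MD5 hex digest. That is an inference from the sample output, not documented behavior; the sketch below reproduces the value with Python's standard library.

```python
import hashlib

def password_hash(password: str) -> str:
    """Hex MD5 digest of the password (inferred from the sample hash)."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()

print(password_hash("abc"))  # 900150983cd24fb0d6963f7d28e17f72
```

Because unsalted MD5 hashes can be brute-forced quickly, a long random password is a sensible choice if the script is reachable from the Internet.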

Edit the copied "list" file to set the password hash and the location of the album directory by modifying the following constants in the script.

# Local absolute path of the album directory.
ALBUM_DIR = "/photo_server/public/album"
# Local absolute path of the spool directory.
# An empty string disables the upload feature.
SPOOL_DIR = "/photo_server/spool"
# Absolute URL of ALBUM_DIR.
# An empty string means "album" in the same directory as the script.
URL_PREFIX = "https://yourdomain/photo/album"
# Password hash for the CGI script.
# An empty string disables the form authentication.
CGI_PASSWORD_HASH = "900150983cd24fb0d6963f7d28e17f72"

Now you can access the CGI script from a Web browser. Confirm that you can see a list of photos by accessing "https://yourdomain/photo/list". Enjoy the features, which I think are convenient for photo enthusiasts as well as ordinary people.

For tech-savvy people, another way to retrieve data is to use a command-line tool like "curl". When accessed without the HTTP header "Accept: text/html", the CGI script returns JSON data rather than XHTML5.

$ curl "https://yourdomain/photo/list/?password=abc"

If you enabled the password feature, the client must send the password on the first access. The response includes a cookie named "session_id". After that, the client can use the cookie in place of the password for 30 days.

The CGI script provides an API with RESTful entry points. The resource ID to search is specified as the path info of the URL. For example, to search "/2018-01-07", use the URL "https://yourdomain/photo/list/2018-01-07". Other query conditions are set as parameters of the query string, like "?depth=3&max_records=100". You can use the same parameters as the command-line tool, so refer to the help message shown with the "--help" flag.
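A minimal Python client sketch using only the standard library follows. It assumes the script lives at "https://yourdomain/photo/list" as configured above; `build_url`, `make_session`, `session_id`, and `fetch_json` are hypothetical helper names. The cookie jar keeps the "session_id" cookie returned on the first access, so later requests through the same opener need no password.

```python
import json
import urllib.parse
import urllib.request
from http.cookiejar import CookieJar
from typing import Optional, Tuple

BASE_URL = "https://yourdomain/photo/list"  # example URL of the CGI script

def build_url(resource_id: str, **params) -> str:
    """Resource ID goes into the path info; other conditions into the query."""
    path = urllib.parse.quote(resource_id)  # keeps the "/" separators
    query = urllib.parse.urlencode(params)
    return f"{BASE_URL}{path}" + (f"?{query}" if query else "")

def make_session() -> Tuple[urllib.request.OpenerDirector, CookieJar]:
    """Opener that stores cookies such as session_id and resends them."""
    jar = CookieJar()
    return urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar)), jar

def session_id(jar: CookieJar) -> Optional[str]:
    """Value of the session_id cookie, usable later as "auth_token"."""
    for cookie in jar:
        if cookie.name == "session_id":
            return cookie.value
    return None

def fetch_json(opener: urllib.request.OpenerDirector, url: str) -> dict:
    # Without "Accept: text/html" the script answers with JSON.
    with opener.open(url) as response:
        return json.load(response)

# Example:
# opener, jar = make_session()
# fetch_json(opener, build_url("/", password="abc"))  # first access sets session_id
# data = fetch_json(opener, build_url("/2018-01-07", depth=3, max_records=100))
```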

Other RESTful entry points are listed below. If you update (remove, restore, or edit) a resource, you must add the session ID as a parameter named "auth_token". This is required to prevent CSRF attacks.

- DELETE /foo : Remove the resource "foo".

- POST /foo (with parameter "remove=1") : Remove the resource "foo".

- GET /__trash__ : Search the content of the trash.

- POST /__trash__/foo (with parameter "restore=1") : Restore the resource "/__trash__/foo".

- POST /__edit__/foo : Set metadata of "/foo". It takes parameters like "edit_set_date=on&edit_date=2018-10-11T18:30:02".

- GET /__zip__/foo : Get ZIP archive data under "/foo".

- GET /__convert__/foo : Convert the image of "/foo" and get the result. It takes parameters like "convert_format=png&convert_resize=1600".
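The update entry points can be exercised with plain form POSTs. The following Python sketch only builds `urllib.request.Request` objects, which you could send with an opener that holds the session cookie. The helper names are hypothetical, and it assumes "auth_token" is accepted as a form field in the POST body; the example base URL is the one configured above.

```python
import urllib.parse
import urllib.request

BASE_URL = "https://yourdomain/photo/list"  # example URL of the CGI script

def post_request(path: str, params: dict, auth_token: str) -> urllib.request.Request:
    """Build a form POST to an entry point, adding the CSRF token "auth_token"."""
    body = urllib.parse.urlencode({**params, "auth_token": auth_token}).encode("ascii")
    return urllib.request.Request(BASE_URL + urllib.parse.quote(path), data=body,
                                  method="POST")

def remove(resource_id: str, token: str) -> urllib.request.Request:
    return post_request(resource_id, {"remove": "1"}, token)

def restore(trashed_id: str, token: str) -> urllib.request.Request:
    # trashed_id looks like "/__trash__/foo", as listed by GET /__trash__.
    return post_request(trashed_id, {"restore": "1"}, token)

def set_date(resource_id: str, iso_date: str, token: str) -> urllib.request.Request:
    return post_request("/__edit__" + resource_id,
                        {"edit_set_date": "on", "edit_date": iso_date}, token)

def convert_url(resource_id: str, fmt: str, size: int) -> str:
    # A plain GET; per the rule above, only updates need auth_token.
    query = urllib.parse.urlencode({"convert_format": fmt, "convert_resize": size})
    return f"{BASE_URL}/__convert__{urllib.parse.quote(resource_id)}?{query}"
```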

Advanced Features

If Dcraw is installed on the system, jkzr_proc also handles raw image files of many digital cameras (DNG, CR2, NEF, ARW, ORF, RW2, PEF, RAF). When cataloging a raw file, the original data is saved intact, and a viewing JPEG image and a thumbnail JPEG image are generated automatically. I recommend saving the raw data of a few of your very best photos as well as the developed TIFF/JPEG data. You can install "tca.tsv" and "distortion.tsv" from the package under "/usr/local/share/jkzr" so that transverse chromatic aberration and distortion are corrected with lens profiles. You can input raw image files even if Dcraw is not installed; in that case, they are treated as generic binary files.

If FFmpeg is installed on the system, jkzr_proc also handles video files (MP4, MOV, AVI). When cataloging a video file, the original data is saved intact, and a viewing JPEG image and a thumbnail JPEG image are generated automatically. It's convenient to store still images and videos in the same catalog. You can input video files even if FFmpeg is not installed; in that case, they are treated as generic binary files.

If Enfuse is installed on the system, you can enable dynamic range optimization (DRO), which is useful for making natural but impressive images. DRO is also called pseudo-HDR: one image is converted into three images, namely an under-exposed image, the normally exposed intact image, and an over-exposed image. They are merged using a specific tone-mapping technique so that every part of the merged image is well exposed. You specify the strength of DRO with the "--dro" flag; 4 is a well-balanced value. Meanwhile, auto exposure adjustment is also useful for making attractive images that preserve the impression of the original images. Auto exposure adjustment reads EXIF metadata to compute the exposure value of the input image and adjusts the brightness of the output image accordingly. You can specify the strength of auto exposure adjustment with the "--auto_adjust" flag; 1.6 is a well-balanced value. If you make catalog files and want to apply those features only to the view images, use the "--catalog_dro" and "--catalog_auto_adjust" flags.
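To make the two ideas concrete, here is a simplified Python sketch; it is not how jkzr_proc or Enfuse is implemented. `exposure_value` uses the standard definition EV = log2(N^2 / t), normalized to ISO 100, from the EXIF FNumber, ExposureTime, and ISO values. `pseudo_hdr` makes under- and over-exposed copies of grayscale pixel values and blends the three per pixel with a Gaussian "well-exposedness" weight centered on mid-gray, which is one ingredient of the exposure fusion that Enfuse performs; Enfuse can also weight contrast and saturation and blends across a multi-resolution pyramid, which this sketch omits. The `ev` offset is a hypothetical parameter; how "--dro" and "--auto_adjust" values map to internal settings is not described here.

```python
import math
from typing import List

def exposure_value(f_number: float, exposure_time: float, iso: float) -> float:
    """EV normalized to ISO 100 from EXIF FNumber, ExposureTime, and ISO."""
    return math.log2(f_number ** 2 / exposure_time) - math.log2(iso / 100)

def well_exposedness(v: float, sigma: float = 0.2) -> float:
    # Gaussian weight that favors values near mid-gray (0.5).
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def pseudo_hdr(pixels: List[float], ev: float = 1.0) -> List[float]:
    """Fuse under-, normal-, and over-exposed copies of values in 0..1."""
    gains = (2 ** -ev, 1.0, 2 ** ev)
    fused = []
    for v in pixels:
        samples = [min(1.0, v * g) for g in gains]  # simulated exposures, clipped
        weights = [well_exposedness(s) for s in samples]
        fused.append(sum(w * s for w, s in zip(weights, samples)) / sum(weights))
    return fused

print(exposure_value(8.0, 1 / 125, 100))  # f/8, 1/125 s, ISO 100
print(pseudo_hdr([0.1, 0.5, 0.9]))  # shadows lifted, highlights pulled down
```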

My Usage

I take many photos and a few videos daily. I have a cheap rental VPS (virtual private server) connected to the Internet and share my photos on it with my family members and relatives. This software was developed for that purpose. Let's see how I actually use it.

I develop raw photos in Lightroom on a MacBook and export them as 16-bit TIFF with the Display P3 color profile. I edit videos with QuickTime Player or iMovie. Both exported images and videos are saved in "$HOME/photos". Then, I upload them to the remote server with a command like this.

$ jkzr_proc \
    --destination="you@example.com:jkzr/spool" \
    --cleanup \
    --max_area=16m --max_area_high=10m --max_size=50mi \
    --intact_by_rating=5 \
    --output_format=jp2 \
    ~/photos/*.tif

The "--destination" flag supports an SCP destination so that the output files are saved on a remote machine. The "--cleanup" flag removes each input file once it has been output successfully. This is convenient because I can interrupt and rerun the command casually. The "--max_area", "--max_area_high", and "--max_size" flags shrink the images to save storage space so that my cheap VPS can store files for many years. The "--output_format" flag converts the images into JPEG 2000, which can reduce the file size drastically while keeping image quality high. JPEG 2000 can handle 16-bit channels, so I can retouch the images without visible color banding. 10 million pixels is good enough for display on a 4K monitor, and the average file size is only about 10MB. With the "--intact_by_rating" flag, images rated 5 are not shrunk; I give that rating to only a few images a day. Moreover, if an image is a particular favorite, I send the raw file too. The same command can send raw files as-is.

On the remote server, which runs Linux, jkzr_proc is also run periodically by cron to make catalog data. It accepts any data format because my spouse also sends images from her smartphone via an SFTP client. Create the three directories "$HOME/jkzr/album", "$HOME/jkzr/spool", and "$HOME/jkzr/stash" beforehand. Install the following script as "$HOME/jkzr/jkzr_cron_catalog.sh" and give it executable permission.

#!/bin/bash
# Catalog the files that have arrived in the spool directory (run by cron).

# cron starts jobs with a minimal PATH; make sure jkzr_proc and its helpers are found.
export PATH="/usr/local/bin:/usr/bin:/bin"

base_dir="${HOME}/jkzr"
album_dir="${base_dir}/album"
spool_dir="${base_dir}/spool"
stash_dir="${base_dir}/stash"
log_file="${base_dir}/log"
lock_file="${base_dir}/lock"

# Exit quietly if the spool directory is empty.
spool_num="$(find "${spool_dir}" -type f -print | wc -l)"
[[ "${spool_num}" -gt 0 ]] || exit 0

(
  # Take an exclusive lock on fd 9; give up after 10 seconds if another run holds it.
  flock -w 10 9 || exit 1
  # Record the PID of this run in the lock file.
  echo "${$}" 1>&9
  find "${spool_dir}" -type f -print | sort -r |
    jkzr_proc \
      --read_stdin --ignore_fresh --max_inputs=30 \
      --destination="${album_dir}" \
      --catalog --name_for_catalog \
      --stash="${stash_dir}" --cleanup \
      --interval 1 --ignore_errors \
      --max_area=16m --max_area_high=10m --max_size=50mi \
      --intact_by_label=intact --intact_by_rating=5 \
      --catalog_dro=4.0 --catalog_auto_adjust=1.6 \
      --log_level info >>"${log_file}" 2>&1
) 9>"${lock_file}"

The "flock" command prevents overlapping executions of the script. The jkzr_proc flags work as follows.

- "--ignore_fresh" skips fresh files, which might still be in the process of uploading.

- "--max_inputs" limits the number of input files processed by one execution.

- "--destination" sets the album directory.

- "--catalog" makes catalog data.

- "--name_for_catalog" names output files so that the catalog is manageable by jkzr_list. It is an alias of "--date_subdir", "--timestamp_name", "--inherent_name", and "--unique_name".

- "--stash" moves each input file to the stash directory before reading it, and "--cleanup" removes the input file. Together, they ensure that only failed input files remain in the stash directory.

- "--interval" mitigates overload of the system.

- "--ignore_errors" continues the process even if some files fail.

- "--max_area", "--max_area_high", and "--max_size" shrink image files that haven't been shrunk yet.

- "--intact_by_label" and "--intact_by_rating" keep favorite images intact.

- "--catalog_dro" and "--catalog_auto_adjust" make view images pleasant without modifying the original files.

The crontab entry is set like this.

* * * * * /home/you/jkzr/jkzr_cron_catalog.sh
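The stash-and-cleanup pattern that makes this job safe to rerun every minute can be sketched in Python. This illustrates the idea and is not jkzr_proc's code: `process` is a hypothetical callback standing in for the conversion, and the 60-second freshness threshold is an assumption, since the actual threshold of "--ignore_fresh" is not given here.

```python
import os
import shutil
import tempfile  # used only by the demo at the bottom
import time
from typing import Callable, Iterable, List

def process_spool(paths: Iterable[str], stash_dir: str,
                  process: Callable[[str], None],
                  fresh_seconds: float = 60.0, max_inputs: int = 30,
                  interval: float = 0.0) -> List[str]:
    """Stash, process, and clean up input files; return those processed OK.

    A file whose processing fails stays in stash_dir, mirroring what
    --stash combined with --cleanup achieves. Base names are assumed unique.
    """
    succeeded: List[str] = []
    attempted = 0
    now = time.time()
    for path in paths:
        # Like --ignore_fresh: leave recently modified files for the next run.
        if now - os.path.getmtime(path) < fresh_seconds:
            continue
        # Like --max_inputs: cap the work done by one execution.
        if attempted >= max_inputs:
            break
        attempted += 1
        stashed = os.path.join(stash_dir, os.path.basename(path))
        shutil.move(path, stashed)  # like --stash: move before reading
        try:
            process(stashed)
        except Exception as error:  # like --ignore_errors: keep going
            print(f"failed: {stashed}: {error}")
            continue
        os.remove(stashed)  # like --cleanup: delete only after success
        succeeded.append(path)
        time.sleep(interval)  # like --interval: ease the load between files
    return succeeded

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as spool, tempfile.TemporaryDirectory() as stash:
        inputs = [os.path.join(spool, name) for name in ("good.tif", "bad.tif")]
        for name in inputs:
            open(name, "w").close()
            os.utime(name, (0, 0))  # pretend the uploads finished long ago

        def fake_process(path: str) -> None:
            if path.endswith("bad.tif"):
                raise ValueError("broken file")

        print(process_spool(inputs, stash, fake_process))  # only good.tif succeeds
        print(os.listdir(stash))  # the failed bad.tif remains in the stash
```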

Through the above processes, the images and videos I take accumulate on the remote server. I send many files and sift through them with jkzr_list so that only the "keepers" survive. Then, I share them with family and relatives. Periodically (once every six months), I download them and save them on a local external hard disk.

$ cd /media/photo_disk/album
$ scp -r you@example.com:jkzr/album/2018-0[1-6]-* .

Then, I process them for uploading to Google Photos. The "--prepare_for_googlephotos" flag converts images into TIFF with the highest possible quality.

$ mkdir -p ~/googlephotos
$ find /media/photo_disk/album/2018-0[1-6]-* -type f -print |
    egrep -i '.*-data\.(tif|jpg|jp2|mov|mp4|avi)$' |
    jkzr_proc \
    --read_stdin \
    --destination="$HOME/googlephotos" \
    --prepare_for_googlephotos

I upload the output TIFF images and videos to Google Photos via a Web browser. You can select multiple files in the file selection dialog by clicking the first file and then shift-clicking the last one.

As the storage capacity of my VPS is limited (only 200GB), I sometimes remove the oldest directories on it. Even so, I still retain two backups: one on the local external storage and one on Google Photos.

Templates of the above processes are in the "script" subdirectory of the download package for your reference.

Epilogue

Photo enthusiasts make efforts to take great photos. However, the reason you take photos is that you want to recall the scene later. The value of a picture is determined by how (and how often) it is viewed. Therefore, preparing a good system for viewing your pictures is as important as taking them. I developed this software for my personal use, and I'd be glad if someone finds it useful and enjoys a happier photo life.

Jakuzure is distributed as open source software under the MIT license. If you have questions or find bugs, please contact me <info@fallabs.com>. By the way, the name "Jakuzure" (蛇崩) comes from a culvert stream near my apartment in Tokyo. It is not related to an anime character.